CUDA系列学习(五)GPU基础算法: Reduce, Scan, Histogram

1. Task complexity

task complexity包括step complexity(可以并行成几个操作) & work complexity(总共有多少个工作要做)。

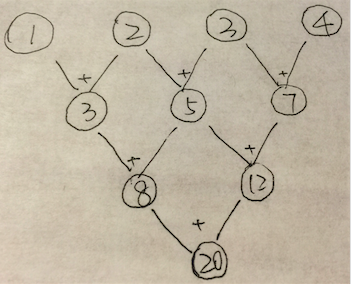

e.g. 下面的tree-structure图中每一个节点表示1个操作数,每条边表示1个操作,同层edge表示相同操作,问该图表示的task的step complexity & work complexity分别是多少。

Ans:

step complexity: 3;

work complexity: 6。

下面会有更具体的例子。

### 2.1 what is reduce? Reduce input: 1. set of elements 2. reduction operation 1. binary: 两个输入1个输出 2. 操作满足结合律: (a@b)@c = a@(b@c), 其中@表示operator e.g +, 按位与 都符合;a^b(expotentiation)和减法都不是

### 2.1.1 Serial implementation of Reduce: reduce的每步操作都依赖于其前1个操作的结果。比如对前面那个例子,n个数相加,work complexity 和 step complexity都是O(n)(缘由不言自明吧~)我们的目标就是并行化操作,降下来step complexity. e.g add serial reduce -> parallel reduce。

### 2.1.2 Parallel implementation of Reduce:  也就是说,我们把step complexity降到了

__global__ void parallel_reduce_kernel(float *d_out, float* d_in){

int myID = threadIdx.x + blockIdx.x * blockDim.x;

int tid = threadIdx.x;

//divide threads into two parts according to threadID, and add the right part to the left one, lead to reducing half elements, called an iteration; iterate until left only one element

for(unsigned int s = blockDim.x / 2 ; s>0; s>>1){

if(tid<s){

d_in[myID] += d_in[myID + s];

}

__syncthreads(); //ensure all adds at one iteration are done

}

if (tid == 0){

d_out[blockIdx.x] = d_in[myId];

}

}Quiz: 看1下上面的code可以从哪里进行优化?

Ans:我们在上1讲中提到了global,shared & local memory的速度,那末这里对global memory的操作可以更改成shared memory,从而进行提速:

__global__ void parallel_shared_reduce_kernel(float *d_out, float* d_in){

int myID = threadIdx.x + blockIdx.x * blockDim.x;

int tid = threadIdx.x;

extern __shared__ float sdata[];

sdata[tid] = d_in[myID];

__syncthreads();

//divide threads into two parts according to threadID, and add the right part to the left one, lead to reducing half elements, called an iteration; iterate until left only one element

for(unsigned int s = blockDim.x / 2 ; s>0; s>>1){

if(tid<s){

sdata[myID] += sdata[myID + s];

}

__syncthreads(); //ensure all adds at one iteration are done

}

if (tid == 0){

d_out[blockIdx.x] = sdata[myId];

}

}优化的代码中还有1点要注意,就是声明的时候记得我们第3讲中说过的kernel通用表示情势:

kernel<<<grid of blocks, block of threads, shmem>>>parallel_reduce_kernel<<<blocks, threads, threads*sizeof(float)>>>(data_out, data_in);好,那末问题来了,对这两个版本(parallel_reduce_kernel 和 parallel_shared_reduce_kernel), parallel_reduce_kernel比parallel_shared_reduce_kernel多用了几倍的global memory带宽? Ans: 分别斟酌两个版本的读写操作:

parallel_reduce_kernel| Times | Read Ops | Write Ops |

| 1 | 1024 | 512 |

| 2 | 512 | 256 |

| 3 | 256 | 128 |

| … | ||

| n | 1 | 1 |

parallel_shared_reduce_kernel| Times | Read Ops | Write Ops |

| 1 | 1024 | 1 |

所以,parallel_reduce_kernel所需的带宽是parallel_shared_reduce_kernel的3倍。

3. Scan

3.1 what is scan?

Example:

- input: 1,2,3,4

- operation: Add

- ouput: 1,3,6,10(out[i]=sum(in[0:i]))

目的:解决难以并行的问题

拍拍脑袋想一想上面这个问题O(n)的1个解法是out[i] = out[i⑴] + in[i].下面我们来引入scan。

Inputs to scan:

- input array

- 操作:binary & 满足结合律(和reduce1样)

- identity element [I op a = a], 其中I 是identity element

quiz: what is the identity for 加法,乘法,逻辑与,逻辑或?

Ans:

| op | Identity |

| 加法 | 0 |

| 乘法 | 1 |

| 逻辑或|| | False |

| 逻辑与&& | True |

3.2 what scan does?

| I/O | content | ||||

|---|---|---|---|---|---|

| input | [ |

… | |||

| output | [ |

… |

其中

3.2.1 Serial implementation of Scan

很简单:

int acc = identity;

for(i=0;i<elements.length();i++){

acc = acc op elements[i];

out[i] = acc;

}work complexity:

step complexity:

那末,对scan问题,我们怎样对其进行并行化呢?

3.2.1 Parallel implementation of Scan

斟酌scan的并行化,可以并行计算n个output,每一个output元素i相当于

Q: 那末问题的work complexity和step complexity分别变成多少了呢?

Ans:

- step complexity:

取决于n个reduction中耗时最长的,即O(log2n) - work complexity:

对每一个output元素进行计算,总计算量为0+1+2+…+(n⑴),所以复杂度为O(n2) .

可见,step complexity降下来了,惋惜work complexity上去了,那末怎样解决呢?这里有两种Scan算法:

| more step efficiency | more work efficiency | |

| hillis + steele (1986) | √ | |

| blelloch (1990) | √ |

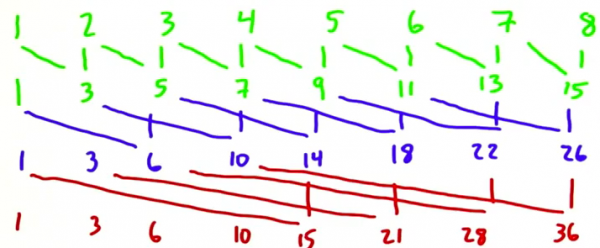

- Hillis + Steele

对Scan加法问题,hillis+steele算法的解决方案以下:

即streaming’s

step 0: out[i] = in[i] + in[i⑴];

step 1: out[i] = in[i] + in[i⑵];

step 2: out[i] = in[i] + in[i⑷];

如果元素不存在(向下越界)就记为0;可见step 2的output就是scan 加法的结果(想一想为何,我们1会再分析)。

那末问题来了。。。

Q: hillis + steele算法的work complexity 和 step complexity分别为多少?

| O(n^2) | |||||

| work complexity | √ | ||||

| step complexity | √ |

解释:

为了无妨碍大家思路,我在表格中将答案设为了白色,选中表格可见答案。

- step complexity:

由于第i个step的结果为上1步输出作为in, out[idx] = in[idx] + in[idx - 2^i], 所以step complexity =O(log(n)) - work complexity:

workload =(n?1)+(n?2)+(n?4)+... ,共有log(n) 项元素相加,所以可以近似看作1个矩阵,对应上图,长log(n) , 宽n,所以复杂度为nlog(